Understanding Scores

Evolve captures a wide range of metrics to evaluate the performance of your GPU. These metrics are grouped into scores. Which scores are captured depends on the capabilities of your device and the type of benchmark run. The following scores can be produced:

- Raytracing (Not included unless “Mode” is set to pipeline or inline)

- Rasterization (Not included unless “Mode” is set to pipeline or inline and “Renderer” is set to “Hybrid”)

- Compute (Not included unless “Mode” is set to pipeline or inline)

- Workgraph (Not included unless “Mode” is set to workgraphs, a mode exclusive to the path tracer)

- Acceleration Structure Builds

- Driver

- Energy
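The conditions in the list above can be expressed as a small lookup. This is a minimal sketch, not part of Evolve: the `Mode` and `Renderer` enums and the `expected_scores` function are hypothetical names, and the sketch does not model the device capabilities that can also remove scores.

```python
from enum import Enum


class Mode(Enum):
    """Hypothetical names for the "Mode" setting values mentioned above."""
    PIPELINE = "pipeline"
    INLINE = "inline"
    WORKGRAPHS = "workgraphs"


class Renderer(Enum):
    """Hypothetical names for the "Renderer" setting values."""
    HYBRID = "hybrid"
    PATH_TRACING = "path_tracing"


def expected_scores(mode: Mode, renderer: Renderer) -> list[str]:
    """Return the scores the list says can be produced for a run.

    Device capabilities can remove further scores; that is not modeled here.
    """
    scores: list[str] = []
    if mode in (Mode.PIPELINE, Mode.INLINE):
        scores.append("Raytracing")
        # Rasterization additionally requires the Hybrid renderer.
        if renderer is Renderer.HYBRID:
            scores.append("Rasterization")
        scores.append("Compute")
    if mode is Mode.WORKGRAPHS:
        # The text describes workgraphs mode as exclusive to the path tracer.
        if renderer is not Renderer.PATH_TRACING:
            raise ValueError("workgraphs mode requires the path tracer")
        scores.append("Workgraph")
    # Listed without a Mode/Renderer condition.
    scores += ["Acceleration Structure Builds", "Driver", "Energy"]
    return scores
```

For example, a pipeline-mode run with the Path Tracing renderer lists Raytracing and Compute, but not Rasterization.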

For details on how each of these scores is established, see the following sections. For scores that can be subdivided into individual GPU passes, the section on each score lists the “render passes” that fall under it.

Raytracing

The Raytracing score is formed from all passes that perform hardware-accelerated raytracing through the Vulkan Raytracing and DXR APIs. This score can depend heavily on the trace mode (inline or pipeline) and the type of renderer (hybrid or path tracing). See also the sections on Tracing Modes and Renderers.

Note that when the “Renderer” is set to “Path Tracing”, the only render pass that may be run is “Wavefront Pathtracing”.

Global Illumination Tracing

These passes shoot rays that sample the Global Illumination of the scene; the results are used to fill the irradiance cache.

Reflections Tracing

These passes trace rays from surfaces that have reflective properties such as metals, or the reflective coating on leaves.

Shadow Tracing

These passes trace rays to check for light occlusion, e.g., determining which objects are shadowed from the sun and which aren’t.

Wavefront Pathtracing

These passes trace rays simulating the light as it travels throughout the scene. This will only be run when “Renderer” is set to “Path Tracing”.

Transparency and Translucency Tracing

These passes trace refracted rays through transparent surfaces such as glass.

Water Tracing

These passes trace rays from water surfaces to calculate reflected light.

Rasterization

The Rasterization score is formed from all passes that perform operations involving the hardware rasterizer, which converts triangles to pixels. This score is not active with the “Path Tracer”, since all of its output is formed through raytracing. See also the section on Renderers.

Rasterization Culling

These passes cull geometry that is not visible on the screen to reduce the amount of rasterization work.

Visibility Buffer

These passes render geometry using the rasterizer, primarily for creation of visibility buffers used for Raytracing.

Deferred

These passes primarily use the rasterizer to build g-buffers from the visibility buffers for raytracing, and to composite the final frame.

Compute

The Compute score is formed from GPU operations such as preparing for rendering, animations and wind physics. Because it involves both rendering-related operations and other game-like logic, this score covers a broader set of work than most other scores.

Post

These passes include effects such as depth of field, eye adaptation, Temporal Anti-Aliasing (TAA), and upscaling.

World Update

These passes perform animations, streaming and bookkeeping so that data is available for rendering.

Water Resolve

These passes are responsible for compositing the results from water reflection and refraction into the final image.

Global Illumination Resolve

These passes calculate the Global Illumination of the scene by sampling the irradiance cache and processing the results using ReSTIR.

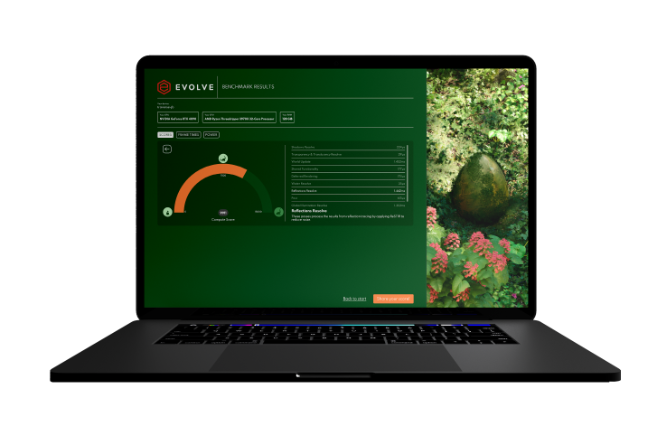

Reflections Resolve

These passes process the results from reflection tracing by applying ReSTIR to reduce noise.

Shadows Resolve

These passes process the shadow hit results. They apply additional processing for soft shadows.

Transparency and Translucency Resolve

These passes do additional processing for translucent geometry such as particles.

Workgraph

The Workgraph score captures all shading and computation operations performed on the GPU, except Acceleration Structure Builds. In a run with “Mode” set to “Workgraph”, all GPU work that would normally be categorized under the “Raytracing”, “Rasterization” and “Compute” scores is instead dispatched in a single batch through the new “Workgraphs” feature. The resulting score is therefore a combination of all of these scores.

Pathtracing

These passes trace rays simulating the light as it travels throughout the scene.

Acceleration Structure Build

The Acceleration Structure Build score is formed from GPU operations that build the acceleration structures required for raytracing. The algorithm used to build these structures is highly vendor-dependent.

Build Blas

Driver pass that builds the bottom-level acceleration structures used for raytracing.

Build Tlas

Driver pass that builds the top-level acceleration structures used for raytracing.

Refit Blas

Driver pass that refits the bottom-level acceleration structures used for raytracing.
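To summarize the sections above, the sketch below records which render pass falls under which score, exactly as listed in this document. `PASSES_BY_SCORE`, `score_for_pass` and `raytracing_candidate_passes` are hypothetical helpers for illustration only; they do not reflect how Evolve organizes its passes internally. The Driver and Energy scores have no listed passes, so they are omitted.

```python
# Render passes per score, as listed in the sections above.
PASSES_BY_SCORE: dict[str, list[str]] = {
    "Raytracing": [
        "Global Illumination Tracing",
        "Reflections Tracing",
        "Shadow Tracing",
        "Wavefront Pathtracing",
        "Transparency and Translucency Tracing",
        "Water Tracing",
    ],
    "Rasterization": ["Rasterization Culling", "Visibility Buffer", "Deferred"],
    "Compute": [
        "Post",
        "World Update",
        "Water Resolve",
        "Global Illumination Resolve",
        "Reflections Resolve",
        "Shadows Resolve",
        "Transparency and Translucency Resolve",
    ],
    "Workgraph": ["Pathtracing"],
    "Acceleration Structure Build": ["Build Blas", "Build Tlas", "Refit Blas"],
}


def score_for_pass(pass_name: str) -> str:
    """Return the score a render pass contributes to."""
    for score, passes in PASSES_BY_SCORE.items():
        if pass_name in passes:
            return score
    raise KeyError(pass_name)


def raytracing_candidate_passes(path_tracing: bool) -> list[str]:
    """Raytracing passes that may run for the chosen renderer.

    With the Path Tracing renderer only Wavefront Pathtracing may run, and
    Wavefront Pathtracing runs only with that renderer.
    """
    if path_tracing:
        return ["Wavefront Pathtracing"]
    return [p for p in PASSES_BY_SCORE["Raytracing"] if p != "Wavefront Pathtracing"]
```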

Driver

The Driver score is formed from operations issued on the CPU side for the graphics driver to complete. Currently, it only captures operations for creating and updating acceleration structures. This score can be influenced by the speed of your CPU, but it also captures stalls induced by the graphics driver on the CPU side.

Energy

The Energy score is a measurement of the GPU's energy consumption, derived from measurements provided by the hardware vendor. As a result, you may see differences between graphics cards from different vendors. Since power usage can differ based on the selected “Tracing Mode” and “Renderer”, these settings can also influence the Energy score to some degree. See also the sections on Tracing Modes and Renderers for more details.
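This document does not describe how Evolve converts the vendor's measurements into the Energy score. As general background only, energy is power integrated over time, so a series of timestamped power readings can be turned into an energy estimate. The sketch below assumes hypothetical `(timestamp_s, power_w)` samples and uses the trapezoidal rule; it is not Evolve's formula.

```python
def energy_joules(samples: list[tuple[float, float]]) -> float:
    """Approximate energy in joules from (timestamp_s, power_w) samples.

    Trapezoidal rule: E ~= sum((P[i] + P[i+1]) / 2 * (t[i+1] - t[i])).
    This is a generic illustration, not how Evolve computes its score.
    """
    if len(samples) < 2:
        return 0.0
    total = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        if t1 < t0:
            raise ValueError("timestamps must be non-decreasing")
        # Average power over the interval times the interval length.
        total += 0.5 * (p0 + p1) * (t1 - t0)
    return total
```

Dividing the result by the total sampled duration gives the average power over the same window.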

Recommended Testing Procedure

Benchmarking is difficult to get right, since many factors affect whether a run is repeatable.

There are some rough guidelines, but they are still subject to the platform they are run on. For example, after a few runs the platform may clock down a device to reduce its operating temperature, a device may “boost clock” for a while during a benchmark run, or a device may change its voltage and power draw dynamically during a run.

To control for as many variables as possible, test in identical conditions and keep an eye on temperature, core and memory clock frequencies, voltage, and wattage consumed during a run.
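One way to act on this advice is to log telemetry during each run and compare the runs' conditions before comparing their scores. The sketch below assumes you already collect readings with some logging tool; `TelemetrySample`, its field names, `mismatched_fields`, and the 5% tolerance are hypothetical choices, not part of Evolve.

```python
from dataclasses import dataclass, fields
from statistics import mean


@dataclass
class TelemetrySample:
    """One reading from a hypothetical hardware logger."""
    temperature_c: float
    core_clock_mhz: float
    memory_clock_mhz: float
    voltage_v: float
    power_w: float


def summarize(samples: list[TelemetrySample]) -> dict[str, float]:
    """Mean of each telemetry field over one run."""
    return {
        f.name: mean(getattr(s, f.name) for s in samples)
        for f in fields(TelemetrySample)
    }


def mismatched_fields(
    run_a: list[TelemetrySample],
    run_b: list[TelemetrySample],
    rel_tol: float = 0.05,
) -> list[str]:
    """Fields whose run means differ by more than rel_tol, relative to run_a."""
    a, b = summarize(run_a), summarize(run_b)
    return [name for name in a if abs(b[name] - a[name]) > rel_tol * abs(a[name])]
```

If `mismatched_fields` reports anything (for example, a lower average core clock in the second run), the two runs were probably not taken under identical conditions.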

There are a few key principles that help reduce run-to-run variance:

- Make sure that the device is plugged in where applicable (laptop, tablet, phone, etc.).

- Make sure the device is in a well-cooled environment and at a consistent temperature from run to run.

- Make sure there are no other applications running on the device at the same time.

- If consistent runs are mandatory for your use case but peak performance doesn’t matter, consider either pinning the clocks directly through vendor tools or using a tool like StablePowerState to force a device to a lower but sustained clock speed.
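To put a number on run-to-run variance, a common choice is the coefficient of variation (sample standard deviation divided by the mean) over repeated runs. The sketch below also includes a rough heuristic for the clock-down effect described earlier, comparing the first and second halves of a series of runs. Both functions and the 3% threshold are illustrative assumptions, not Evolve features.

```python
from statistics import mean, stdev


def coefficient_of_variation(scores: list[float]) -> float:
    """Sample standard deviation divided by the mean, as a percentage."""
    if len(scores) < 2:
        raise ValueError("need at least two runs")
    return 100.0 * stdev(scores) / mean(scores)


def looks_throttled(scores: list[float], drop_pct: float = 3.0) -> bool:
    """Heuristic: flag a series whose second half averages more than drop_pct
    percent below its first half (the middle run is skipped for odd lengths),
    as can happen when a device clocks down after a few runs."""
    half = len(scores) // 2
    if half == 0:
        return False
    first, second = mean(scores[:half]), mean(scores[-half:])
    return (first - second) / first * 100.0 > drop_pct
```

A low coefficient of variation together with `looks_throttled` returning `False` suggests the runs are comparable; otherwise, revisit the guidelines above before comparing results.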

Rules

As a benchmark, Evolve is expected to produce unbiased, accurate and repeatable results. We therefore need clear rules about what is and isn’t allowed on our benchmark.

- The platform must run EVOLVE as if it were any other application.

- Benchmark-specific optimizations are not allowed; they are expected to be contributed back to the EVOLVE codebase, where they can be of broader use.

- Contributions back to the EVOLVE codebase should not make other platforms perform worse than without these changes applied.

- The platform should not perform render pass replacement, shader replacement or any other kind of manual substitution.

- The platform may not replace or remove any portion of the workload.

- Optimizations that change the visual output of the benchmark are not allowed.

- Optimizations based on empirical data from the benchmark workload are not allowed.

- We recognize that certain optimizations benefit the broader public when they are incorporated into the platform (for example, scalar execution of atomic operations). When these optimizations are made available to a wider audience, they are allowed.

Application specific optimizations

In general, app-specific optimizations for EVOLVE are allowed as long as they respect these rules.

We recognize that app-opt / app-compat exists and is a vital part of the operation of a platform. However, if app-opts are enabled for EVOLVE, they should be disclosed to the EVOLVE development team and should adhere to the previous rules.

Valid benchmark results

A valid benchmark run is one performed on a WHQL-signed driver that adheres to the above rules and that doesn’t produce any visual artifacts during the run (for example, due to driver or application bugs).

Advanced

Benchmarking